Building Tallr: My Personal AI Workflow Tool

The problem I kept running into was that my AI CLI sessions would stop and wait for me, but I didn't know it. I'd assume a session was still running, only to come back later and find it sitting idle, waiting for my response. That wasted a lot of time, and it was also really easy to lose track of which sessions were working and which ones were stuck.

I've only been developing for about three years. When I was studying computer science, coding agents didn't really appear until my last semester. People who have been in the field for a long time can use AI more like a junior developer helping them. For me, it feels more like a peer or even a teacher. That's why I avoid auto-accept mode - I want to read each suggestion, make sure it's good code, and more importantly, learn from it. And that's exactly why it frustrated me when sessions were pending without me knowing. Every one of those moments was a lost chance to review and learn.

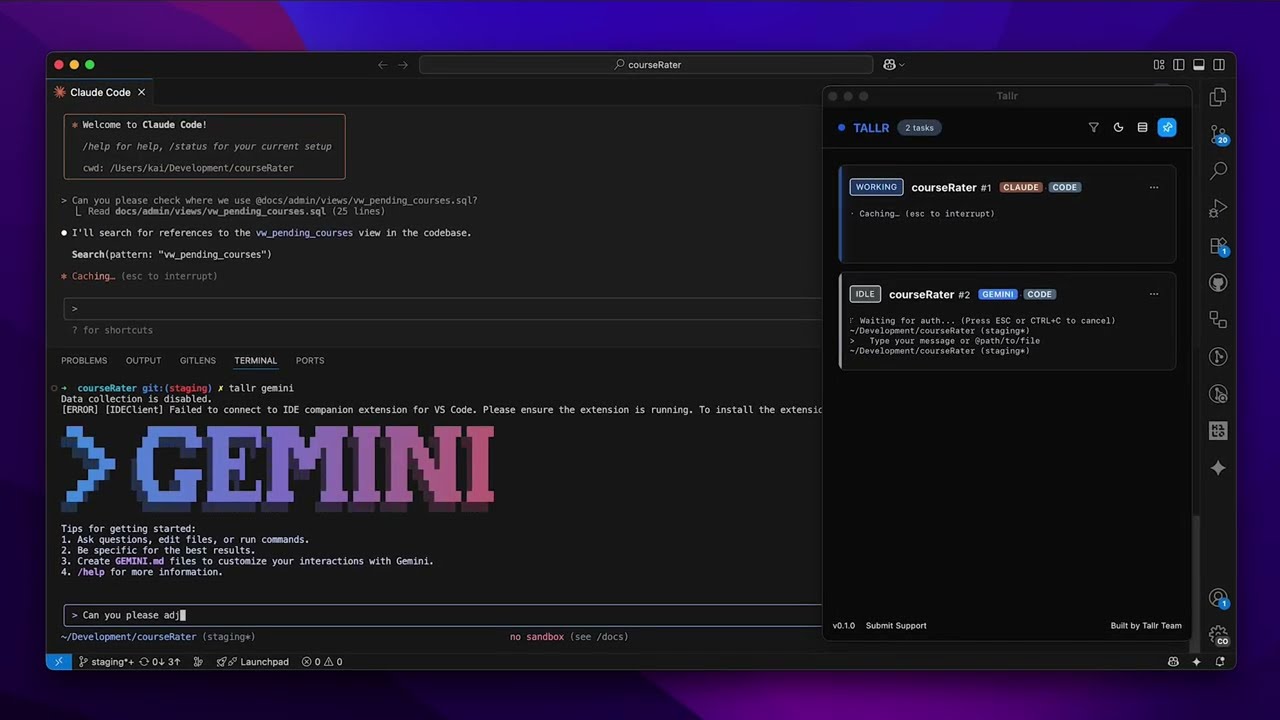

That's when the idea for Tallr was born. The name comes from the "tally light" used in studio settings, where multiple cameras are running and a light shows which one is live. Tallr does the same thing for my AI sessions - it shows me at a glance which ones are working, which are waiting, and which are idle.

What it does

Basically, Tallr gives you a panel where you can see all your sessions in one place. It can detect the state of each session - whether it's working, waiting for input, or just idle - and it gives you a simple way to keep track of them without bouncing between terminals.

Some of the features I've built in so far:

- Real-time monitoring of AI CLI sessions

- Notifications when attention is needed

- A dashboard with expandable details

- IDE auto-detection (VS Code, Cursor, Zed, JetBrains, Windsurf, etc.)

- Always-on-top window and keyboard shortcuts

It currently works with Claude, Gemini, and Codex.

How I built it

This was my first time using Tauri v2; my usual stack is React, React Native, TypeScript, and SQL databases. I wanted something lightweight but native, and Tauri turned out to be a good fit. I wrapped each AI CLI in a thin command (tallr claude, tallr gemini, etc.) and then used simple pattern recognition on the terminal output to figure out whether the session was working, waiting for input, or idle.

For example:

- If the output shows something like "escape to interrupt", that usually means the session is actively running, so I treat it as working.

- If the output shows multiple-choice prompts like "yes / no" or "auto accept", that means the session is paused and waiting for user input, so I mark it as pending.

- If it's neither of those, I assume it's idle.

It's really just recognizing patterns with a few regex checks. Not the most sophisticated approach, but it worked well enough for my needs.
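To make the rules above concrete, here is a minimal sketch of that kind of check in TypeScript. It is not Tallr's actual code: the type, constant, and function names are hypothetical, the regexes are only my guess at patterns matching the example strings mentioned above, and checking "working" before "pending" is an assumption based on the order the rules are listed in.

```typescript
// Hypothetical sketch: names and regexes are illustrative, not taken from Tallr.
type SessionState = "working" | "pending" | "idle";

// Assumed pattern for "the session is actively running".
const WORKING_PATTERNS: RegExp[] = [/escape to interrupt/i];

// Assumed patterns for "the session is waiting on a choice from the user".
const PENDING_PATTERNS: RegExp[] = [/\byes\s*\/\s*no\b/i, /auto[- ]accept/i];

// Classify the most recent chunk of terminal output from a wrapped CLI.
// The rules are checked in order: working, then pending, else idle.
function classifyOutput(recentOutput: string): SessionState {
  if (WORKING_PATTERNS.some((p) => p.test(recentOutput))) return "working";
  if (PENDING_PATTERNS.some((p) => p.test(recentOutput))) return "pending";
  return "idle";
}

// Usage with made-up output lines:
console.log(classifyOutput("Thinking... (escape to interrupt)")); // working
console.log(classifyOutput("Apply this edit? yes / no"));         // pending
console.log(classifyOutput("$ "));                                // idle
```

Real terminal output also carries ANSI escape codes and redraws, so a wrapper would likely need to strip those and only look at the most recent lines before matching.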

Along the way I realized I could have built it by intercepting API calls directly, which probably would've been cleaner and more reliable. But by then I had already gone down the path of pattern recognition, and since this started as a personal project, I decided to ship it anyway. The important part for me was that I learned a lot, and it gave me hands-on experience with Tauri that I wouldn't have gotten otherwise.

Wrapping up

I originally built this just for myself, but I found it surprisingly useful. I posted it on Reddit and people commented that they might want to use it too, which is why I decided to open-source it.

👉 You can check it out here: Tallr on GitHub

Support My Work

If you find Tallr useful or want to support more open-source projects like this, consider sponsoring me: